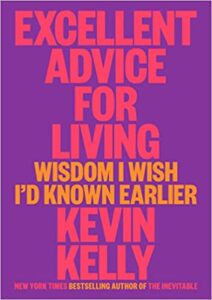

| 0:37 | Intro. [Recording date: February 22, 2023.] Russ Roberts: Today is February 22nd, 2023 and my guest is Kevin Kelly. Kevin is an author, a photographer, a visionary--he's a lot of things, all of them interesting. This is Kevin's 5th appearance on EconTalk. He was last here in June of 2016 talking about his book The Inevitable. His latest book is Excellent Advice for Living: Wisdom I Wish I'd Known Earlier, which is our topic for today. Although I expect we'll get into many, many other things. Kevin, welcome back to EconTalk. Kevin Kelly: It's always a pleasure to be here, to chat with you, and I appreciate your time sharing with me. |

| 1:17 | Russ Roberts: Now, your book is a collection of aphorisms, short bits of wisdom, insights, advice. And, I want to start with the first one, which could be the motto for this program. Here's the first one. 'Learn how to learn from those you disagree with or even offend you. See if you can find the truth in what they believe.' That's what you wrote. Now, I believe this with all my heart, but is it advice anyone really wants? And, can anyone really implement it? And, can you give us some thoughts on how to implement it? I think if you ask people, 'Do you agree with this?' 'Oh, absolutely.' 'Do you do anything about it?' 'Absolutely not.' So, I'm curious if you have any thoughts on implementation. Kevin Kelly: Well, I think you're right. I think everybody aspires to this, and I think it is difficult. So, first of all, these aphorisms were kind of written for me. I tried to take a whole book of advice and try to reduce it down to one memorable thing that I could repeat to myself to constantly remind myself of what to do. Only later on did I want to pass it on to my kids. So, I try to remember this myself when I'm meeting people or hearing things. And so, I try--there are people that I follow on Twitter that I don't agree with, and I'm, like, 'Okay. Other people respect them. I need to hear what they're saying.' I also go to a webpage called Now Upstart, where you get the entire internet and all the news all at once. And, it has Drudge Report and it has Breitbart and it has all the kind of sources that I am really not--I don't have a high respect for, but I want to hear what they're saying. And so, every day I see the headlines and see what they're reporting on and how they're reporting on it. So, I do make some attempt to try and understand positions that I don't understand because some people seem to respect it and understand it, and it's like, 'What am I missing?' And, I might be reminded that, 'Okay, I see where we diverge and I don't agree with it.' 
But, I am trying hard as I can without sort of occupying my entire life doing it to hear opinions that I don't agree with, to see if I can change my mind. Because, I get a thrill out of changing my mind. Russ Roberts: Yeah, I agree with that. But, it's an acquired taste, I think--as I've mentioned many times on the program--to say, 'I don't know.' It took me a long time to say it at all. It took me a long time to enjoy saying it. And, I know people who, when I ask them if they've heard of something, they can't say no, I know they haven't heard of it. And, they kind of go, 'I think maybe,' and I'm thinking, 'You never heard of it. It's okay, just say it. It's okay.' But, the question I have is that--I mean, is this good advice in the sense that, well, I think the reason this kind of experience or advice is not often taken is because it's not so fun. And, certainly at first. Right? To be told, to imagine what your intellectual opponents say--there are many aspects of this--but to imagine your intellectual opponents might be right. That's no fun. So, I'm just suggesting, I think this is advice that's hard to accept and it does often lead to discomfort, which is a better way of making the point. And, that you and I think discomfort is good. Most people don't, I don't think. Kevin Kelly: I in part travel a lot to embrace some discomfort. I think some discomfort is part of what good travel is about. You're actually putting yourself out, you're leaving things behind, and you're having some discomfort. And, that discomfort is good because it's forcing you to look differently, to think differently, to reevaluate what it is that you think is important. And, I think that is--there's another piece of advice in the book, which is that, for certain, there are things that I believe very strongly: that my descendants will be embarrassed by me and my belief. And so, I'm always wondering what is it that I'm totally wrong about? 
And, I really want to know, because I don't want to be wrong. And so, I'm kind of checking in to see what is it that I believe that will be embarrassing in the future. And, it's very hard to tell because obviously I'm not believing in things that I think are wrong. And so, it could be anything. It could be that I think this advice is true and it's all wrong. That would be very embarrassing. But, there's something that I'm getting totally wrong, and I would like to know about that. |

| 6:39 | Russ Roberts: Well, let's talk about the power of clichés. Some of these--some of the aphorisms are things like, 'Oh, of course. Yeah, I know that.' And, others are surprising; and others, as you say, might not be right. But, when we think about a focused piece of insight, I'm going to give you one that you gave in the book, 'If you mess up, 'fess up.' And, it has a few other sentences around it. But, I'm going to remember, 'If you mess up, 'fess up.' And, I'm wondering if you might talk about the role that a mantra has in habit formation. That's really what you're talking about. That, when you come to a decision or a crisis or a moment of anger, what you can, quote, "say to yourself"-- can one of these expressions come to mind? You say you wrote them for yourself. I have my own. I have a bunch of these, of course. I think we all do. And, I want you to talk about whether that's a good idea--whether people listening should try to acquire these. They're a little like poetry: things you memorize to hold onto and have, and you bring them out--if you think enough about them, you'll bring them out without having to ponder them. They will pop into your head and maybe prevent you from making a terrible mistake. Kevin Kelly: Yeah, that's exactly what they're for. They are to inculcate little tiny habits. And, those little habits can be grown into larger habits. And, a lot of it is very practical things. So, one of my pieces of advice that I, again, repeat to myself, or I condensed so that I could repeat to myself was, when I can't find something in my house that I know that I have, and I finally find it, I say to myself, 'Don't put it back where I found it. Put it back where I first looked for it.' And, I always tell myself that, 'Oh right, no, no, don't put it back--put it back where I looked for it.' And, that's much, much better. And, I can find it again very fast. Because I think about it and that's where it is. 
So, it's a little story I tell myself and it works. And, another little example would be when there's a controversy about two sides, black and white--two sides--I always say, 'What is the third side?' There's some third side to it, a triangulation that can break that dilemma and make it easier to see maybe there's a solution in there. And so, it's, like, 'Okay, two people with this side; there's a third side, where is it?' I ask myself, 'When there's two sides, where is the third side?' So, I'd say that to myself when I confront those. And, that's the kind of practical proverb, maxim, adage that I'm trying to make something memorable. I mean, 'If you mess up, 'fess up,' that's something you can remember. Russ Roberts: Another one I love, which is also one of mine; I'd literally say this to my children at times: 'Listening is a superpower.' It's hard to remember because we like to talk, but listening is a superpower and you add to it, a really interesting addition. You say, 'Listening is a superpower. While listening to someone you love, keep asking them: Is there more? until there is no more.' Kevin Kelly: Right. So, you need to go at least three times, but you would say, 'Is there more?' And, then they're talking and then you say, 'Is there more?' And, then you go, and by that point you're really getting the heart of the matter. You've got to go all the way and let them go through the obvious stuff and the cliché stuff. And, then at the third, 'Is there more,' then you'll hear the real story. Russ Roberts: You have a rule of three in conversation. When you ask someone for a reason for why they did something, ask them again to go deeper. Does that work, in your experience? Kevin Kelly: Yes, it does. It does. Particularly if there's an uncomfortable conversation to be had, which is another piece of my advice is that you kind of gauge your own personal growth by how many uncomfortable--to get back to this discomfort--conversations you're willing to have. 
But, part of that is to--yes, if you are willing to be patient and hear them out and encourage them, sometimes it takes that third try. Which is related to another piece of advice, which is: you're--if you're researching something, you often need to go down to the seventh level of the footnote to the footnote or the person who doesn't know, who may know, in order to get an answer to something. And, there is this sort of a sense of this kind of follow-through, because the superficial, easy answers are not really going to work, but you need to be patient. And, patience is another theme of the book, in that we overestimate what can be done in a couple of years and underestimate what can be done in a decade or doing it for 10 years. And so, I have kind of a long view--there's a long view that kind of suffuses the book, which is, like, if you take a long view for investments, for friendships, for relationships, you can get a lot done. If you're trying to get rich quick, it's not going to work. |

| 12:03 | Russ Roberts: Let's talk about the nature of advice, generally. I reflected on this recently on the program: It's not clear people can take advice even when they ask for it. Sometimes I think people ask for advice just because they want to be reassured. They don't really want your opinion. And, this kind of book is a book that I would consider giving to my children. Of my four children, I'm pretty confident two wouldn't read it at all. They would just put it down. One of them likes this kind of book. One, I think might be open to this kind of book. But, in general, if I pass on wisdom to you, especially if you're younger--and I think you and I are about the same age--but if I pass on wisdom to you, can you accept it without having learned it, quote, "the hard way"--by messing up and seeing the consequences? Can we accept advice of this nature, really? Kevin Kelly: So, I think you're onto something. So, what's the metaphor I want to imagine here? I want to imagine that, in a certain sense, the advice works best not when you're introducing an entirely new concept. Because, I think that is very hard to convey this entire amount of wisdom in this little sentence. But, it works best when you have already kind of learned the lesson but haven't articulated it yet and don't have a way to handle it. You might not have even processed it. You may be leaning in that direction, and this is something that comes along and crystallizes it, or coats it, or gives you a handle, or in some ways activates it. You're not actually learning it from this little tweet. You're actually having it soldered onto your brain in some ways, the thought that was emerging already, and there it is--it's kind of like: 'Okay, I get it now, it's been said this way.' So yeah: I think it is hard to change someone's mind by hearing one of these little sentences. Occasionally that might work where, 'Oh yeah. Aha, I hadn't thought about that. That's good.' 
But, I think it's more along the lines of distilling complicated things and giving it a little bit of a handle to it so that you can grab it when you need it. Russ Roberts: Yeah, I mentioned recently the book, The Heart Aroused, by David Whyte. And, what that book is--it's W-H-Y-T-E--it's a lovely book; he takes a number of poems and he distills lessons from those poems for business life, but mostly, as well, everyday life, human personal life. And, one of those is--I think it might be a story, not a poem. But, one of the lessons is: 'Hold your anger for a day.' And, that's a phenomenal piece of advice. And, it's really hard to keep. Because, when you're mad, when you're angry about something, you've lost some control. And, the idea of holding your anger for a day is not easy. In his book, he tells you a lengthy story of someone who had these kind of aphorisms that he held onto, and one of them was, 'Hold your anger for a day.' And, it seemed to save him from making a horrible life mistake. Because he misunderstood something, and when he woke up the next day, he actually found out what was actually going on. I think, 'Hold your anger for a day,' is even better because--not just to keep you from making mistakes due to incomplete information, but also simply you realize: so much else going on. It wasn't me, it wasn't them, it was--and I think stories are very powerful. I think experience--obviously this is one way we can embed those lessons into our lives. But, hearing a story is a way we imagine an experience that we could profit and learn from. Say something about that. Kevin Kelly: Well, you're absolutely right. The potency of a story is beyond belief. And, our lives are just stories. And, civilization is kind of a story. And, it's something that people like David and others are very good at, and I'm not as good at it. So, there's plenty of books, people telling stories and anecdotes and stuff. 
What I'm good at is these telegraphic little proverbs, this distillation. And, that's where I got the joy of--I got the joy out of taking out the stories and trying to compress the story into a single tweet. And, I think that--back to your kids--my kids probably aren't going to read this book, either, because books don't register in their vernacular. But--don't tell Penguin--but the value of this is that it can be tweeted. And I know that these things travel around tweets, and one of your kids may come across them, and tweeting just this little thing by itself; and they'll say, 'Oh, I get that. That's good.' And so, this is how it's going to travel, really. It's going to travel around as little corpuscles, little bullets. And they may pierce somebody at the right time. And they can work a little magic in that sense, because they're naked, in that way. They're not a big story that you have to go through. |

| 18:08 | Russ Roberts: One of your lines is, 'Don't measure your life with someone else's ruler. Don't measure life with someone else's ruler.' And I thought, that's really good advice. And, there's a separate piece of advice you left out, which is, 'Figure out what ruler you want to use. Create your own standards.' But I'm curious, in your own life, was that a problem you dealt with? If I'm correct, you did not go to college--which is definitely other people's ruler. I mean, come on: you didn't go to college. What were you thinking? And so, you've been a bit of a--not a 'bit,'--you're a maverick. You are a contrarian, so probably not measuring yourself with someone else's ruler came relatively easy for you. But, were there times when you regretted, when you regret now looking back on it, that you made decisions that other people expected of you and yet you wish you'd done something different? Kevin Kelly: I haven't had very many of those regrets. And, I think I was temperamentally inclined in this direction. My favorite author in high school was Henry David Thoreau and Walden, and marching to a different drum--that--he was my hero. And, this is in suburban northern New Jersey, which was very parochial. And, other than people like that I didn't have many models for--this was the beginning of the hippies where tuning out, dropping out, was still pretty revolutionary. And, I'm not really a revolutionary. I may be a little contrarian, but I'm not seizing the ramparts and wanting to tear down things. So, I think I was temperamentally inclined that way, but I resigned myself to a life of poverty and not having very much money, but having a lot of time. And, I don't know where I got that idea that that was a better bargain, was to have control over my time rather than having money. 
But, it was only later on, late in life, that I kind of realized that that was a kind of wealth to have control of your time versus money; and that I actually had been wealthy all my life because of that. But, that was not what I thought I was signing up for. So, my life is not, it wasn't kind of like I had a deliberate idea: I'm going to be the rebel on the outside. I just didn't care that much about what my career looked like, because I was sort of signing: I'm not going to have a career. I'm going to do things, projects. I was a project-oriented person. I was going to do projects as they came along. And, I would survive. And, I had been living in Asia and I knew how little I could live on, and that was the liberation that I saw, was saying: 'Look, I don't need very much money to be happy, so I'm going to do these things.' And, the fact that they succeeded was a bonus. |

| 21:26 | Russ Roberts: I think a lot of these insights are about self-control. And, I think the other issue we didn't mention when we were talking about the mantra or the habituation of good habits is trying to gain some self-control, which is part of, I think, growing up. One example is the line you have: 'Don't ruin an apology with an excuse.' And, if you're like most people--certainly when I was younger, and still today to some extent--when you apologize for something, you have this incredible impulse, a compulsive need, to add an excuse, an explanation, a rationalization. Which of course ruins--as you say--ruins the apology. Because it says, 'I'm not really regretful because it really wasn't my fault.' Kevin Kelly: Yeah, right, right. Yeah, no, I have to say that I was late in coming to that. That was something that I wish I had known earlier--because, lots of the stuff, I came late to these understandings and that was something that I actually had to see people do. And one of my mentors, one of my heroes, is Stewart Brand, and I watched him do that, and I saw the power of that. So, that came from seeing someone else execute that. And, there were other things that I learned from Stewart too that I admired tremendously, and with seeing someone do that and behave like that and seeing, 'Oh my gosh, that was incredibly powerful,' I want to do that. I see the wisdom in that, I'm going to work towards that. And so, in that case, it was something, again, that I wished I'd known when I was younger because it would have been much more powerful. Russ Roberts: So, if you want to become a better person, a more successful person, more grown up, you can read a book like this and you can adopt as many of these ideas that you at least agree with, as you can and try to commit some of them to memory, perhaps to use in situations where you might have some impulse control. |

| 23:29 | Russ Roberts: But, you also raised now the role of a mentor or a person one admires. And, besides Stewart Brand, how is that an important part of your life? Has it been an important part of your life, identifying those people? I think it's easy--sometimes--to look at people who we admire who are better than we think we are and say, 'Oh, well, I could never do that. So-and-so's great, but that's too much for me. I wish I could, but I can't.' And, yet, I think the power of aspiring, of trying to achieve, what I would call in Yiddish menschlichkeit--meaning doing the right thing, being the person who can be relied on, the person whose word you can trust, who stays calm in a crisis, and who doesn't always put themselves first--that's what we often want to aspire to. And yet, again--we're talking about mantras: Talk is cheap. It's easy to say that. It's hard to implement. Any thoughts on that? Kevin Kelly: Yeah. I wish I had more practical advice about how to pick a mentor--or get a mentor to pick you. There is a piece of advice which I did not put in it because I never found a way to say it better than the person who said it originally--which was Tim Ferriss--which is that you are kind of [?] to behave the average of the five closest people around you. And so, I did try to surround myself with people that I respected--at the very least--and maybe even admired, if I could. And, the kind of people that I admired were not--were people who did achieve things, but who were, to me, attractive people while they were achieving that. They were people that I liked to be around and I felt were making me better for being around them and for trying to be like them. And so, it wasn't just Stewart. There were other people in my circle that, the more I got to know them, the more I respected them. Which is, for me, the real telltale. Because a lot of times you meet your heroes and it's like, 'Oh my gosh, they're not who I thought they were. 
And, I'm not that--the more I know them, the less I respect them.' I wanted something where every time I met them, I was like, 'Oh my gosh, I respect you more.' And, there are very few people like that. But, if you have one or two in your life, that's a lot. And, I might have been lucky to have that association, but I don't know. There are--I have to say there are a lot of people--actually, there are enough people like that around in the world that if you wanted to, you could learn from. So, I never--again, this is me talking at the age of 70--I think while this was happening, I wasn't as conscious about it. I wasn't as deliberate. I wasn't going about saying, 'I need to find a mentor, someone I can work with and learn from,' not of what they know but how they behave. That never occurred to me. I wish someone had told me that I should be doing this deliberately; I should be paying attention to it. But, it happened very organically, inadvertently in some ways, although I did make a very early decision--and I don't know where it came from--that I was only going to work with people that I really respected. That was clear. It was like, 'If I am not enjoying this, if I am not impressed or respecting these people, I am not going to work with them.' It's, like: I'm just not. I may try to learn from them--again, going back to the original question about someone I disagree with--but I'm not going to work with them. |

| 28:21 | Russ Roberts: Before we leave the book--I want to talk about some other things in a minute--but before we leave the book, I want to read one more because it's a secret you've revealed. Here it goes: 'Ignore what others may be thinking of you because they aren't thinking of you.' Very hard to realize that. Really hard. Kevin Kelly: It is. It is very hard to realize, but it's true. And, you're making a good point that a lot of these things are very easy to say and much harder to actually put into practice. And, these are reminders, and they're not magic pills that you take and then you suddenly do them because you've said them, incantations and stuff. They are habits that you have to have. But I think--my premise is that if you have a little saying that will help you begin that habit, that it's better than not having it--just to try to make the habit out of whole cloth. So, this is a little bit of a mantra, of a reminder, that you can say that might help you with that habit. I know we want to go off the book, but I have one thing which is my favorite piece of advice. Russ Roberts: Please. Kevin Kelly: And, this is sort of--for me, the thing that I really took--only in the last 10 years did I kind of realize this, and it would've helped me earlier--and that is: 'Don't aim to be the best. Aim to be the only.' And, very allied to that is this idea of--my career advice to young people is: Try to work in somewhere where there's not the name for what it is that you are doing, that you find it very hard to describe to anybody else what it is that you're up to. That's an uncomfortable place to be, but it's one of the most powerful places to be. And 'Don't be the best, be the only,' came from my realization of being at Wired where I was trying to make assignments. I'd have ideas, and I thought they were good ideas, and I'd try to get somebody to write them. I was giving away ideas--good ideas. And often--very often--I couldn't get anybody to take these ideas. 
It was like, 'No, that's not a good idea. I don't want to do that. No, who's interested?' Whatever. So, I would put it away, and I'd say, 'Oh, obviously, it's not good.' But, it would come back a year later. And, it's like, 'No, I think that's a good idea. I'll try to get someone to write it.' I couldn't get anybody to write it. And then, maybe another time, and then probably the third time around, I would have this realization, 'Hmm. This is something I have to do. I'm the only one who thinks this is a good idea. I'm going to do it.' And, that would be one of the best things I would write. And, I got in the habit, then, of trying to give away my ideas: Whenever I'm working on something, I'm talking about it, and I'm hoping that someone else will steal this idea. Because if they steal that idea and do it, that means that I didn't have to do it. Because for most young people, if they can find a job--and I was part of this--if they can find a job that I love and that I'm good at, and it pays well--that people find valuable--that's like the Holy Trinity. That's like, 'Okay, that Venn diagram is, like, that's where you're aiming for--to do something that you love. That you're really good at and you get paid for.' But, once you arrived there, there was another level--I realized a whole 'nother level. And, that other level was: Can anybody else do this? And so, this was a way to say No to things. So, there was a fourth thing, is: 'Yeah, I could do this. It would be fun to do, and I'd enjoy it, and I'd get paid to do it.' But, someone else could do this. I only want to do the things that nobody else can do--that I love to do and would be good at and get paid at. And, when I'm doing that, it's easy because I'm not looking over my shoulder. There's no competition. There's no competition, and I can take my time doing it because I've already tried to give this idea away. 
But, the downside--to your point--is this is an incredibly high bar, and most people will take their entire life to kind of figure out what it is that they're really good at that no one else is. This is not something that most young people know in the beginning. There are a few prodigies who have a very clear idea of what they really excel at and are special about, but most people don't. And, your life is going to take many, many detours and stuff in trying to uncover what that is. But, what I wish I'd known is: that's what we're aiming for. And that, to me is sort of what the book is about. Russ Roberts: Yeah. It's a great example of--'Don't be the best, be the only,' is a good example of what might not be good advice in certain situations for certain people, but for others it's life-changing. For me, I think about it as: when you're making a decision and you have a couple of choices, you should consider the value of uniqueness, which often isn't what people, I think, think about. We think about what's going to be fun, what's going to be comfortable. And, I think the whole idea of a calling--the idea of a calling--is: this was meant for you. This is not something that anyone else can do, or it's something that you could do especially well. My dad used to have a saying, which--same advice. He said, 'You should be able to do something better than anyone else in the world.' And, for him it was drop-kicking--the art of kicking a football through a field goal, through the goalposts--an art that had been lost and replaced by place kicking. And, he and I spent a lot of time together in the backyard, drop-kicking. And, I'm not sure that was good advice. I don't think drop-kicking is necessarily what you should devote yourself to or some similar arcane art. But, the idea that you should look for something where you can create, can--excuse me, where you can contribute something unique or close to it, is very powerful. 
And, again, I don't know if it's practical advice, but it's worth thinking about. Kevin Kelly: I think it is practical in the sense that it's a direction, it's not a destiny, it's just as you said, if you have choices that seem to be very, very equal, similar, choose the one that goes in that direction. And, I think it's practical in a sense that as you go in that direction, it gets easier and more fulfilling and--what's the word I want? You can be paralyzed by having to make decisions when you're not particularly in it and not knowing. And, I think even having a suggestion about a general trend can ease some of that paralysis about making decisions. |

| 36:34 | Russ Roberts: Of course, the other thing--and your life is in many ways an example of this--probably in many, many cases, the twists and turns your life has taken. It's not true for everyone. But, it's true for me, and I'm pretty confident it's true for you--you'll let me know. But, what's true is that some of the best things in my life happened unexpectedly. They were serendipitous. And, I think a lot of what makes life thrilling is that serendipity; but it's also not just thrilling--the surprise thing that turns out--but it's also things you wouldn't have found otherwise, not just that they're a surprise. You wouldn't have come across them. And so, many projects that I've done had unexpected consequences. This is one of them, EconTalk. It ended up in a very different direction than where it started. And, I think its impact is very different than what I expected and planned. And, in your case, you produced a crazy, fat book of photographs from Asia that probably cost you a fortune to get those published; and you traveled to take those photographs. And, in some sense, I assume it's not a bestseller, I'm just guessing. It's a labor of love, but I assume it's one of the best things you've ever done with your life--is to create that book. We shared a stage a while ago, a long time--it seems forever ago--in San Francisco, talking about some creative projects that people were doing. I was talking about my animated poem. You were talking about those photographs. And, most people have never heard of them, don't know you did that, I assume. So, talk about how that came out. Are you glad? Kevin Kelly: Well, one thing I would say about--so this is for the listeners--this is a humongous, three-volume set of oversized books that together weigh 30 pounds, and they're in a slipcase. There's 9,000 photographs, which there's no other book like it in the world. I know; I've looked at them. Each of those 9,000 have a caption. And, the audience for the book was singular. 
It was me. I made the book for me, and there's nothing I enjoy more than sitting down late at night and going through this. Of course, I was there at all these photographs, but I just enjoy those images and what they're doing. So, I thought, 'Okay, I'm making it for me; and I'll make 4,000 others for anybody else who might be moved by them like me.' But, the audience for it was me--entirely, a hundred percent, me. So, I didn't care whether or not it really sold or not, as long as--I didn't want to lose money from it. But, I wasn't trying to maximize the number of sales. I was trying to get the book into the hands of people who would really appreciate it, which is why I gave a lot of the books away. But for me, it was just the creation of it and just my enjoyment of this artifact of a book. And so, it was 50 years in making. I started in 1972. And, it's a crazy thing: there's no economic reason for me to do it. It was closer to a compulsion. I mean, not just the book part, but the actual travel--of traveling to these 35 countries--to the end of the road. I mean, these were not taken in the capital cities. These were expeditions out into the back, which I was doing over 50 years. It was a compulsion, in a sense that: 'I can't stop. I've got to go to Uzbekistan up into the north, I haven't been there. Who knows what's there?' So, there was a little bit of a kind of a completist[?] thing of really having to do it. And, it was art. I describe it as art, as my contribution. And, what I'm certain of--no one else in the world could have done that or would have done that. Whether it's significant or means anything to other people, I don't know. But, for me, it was something that I felt I enjoyed doing it. It was a good idea. I thought it was fabulous. I'm a fan of it, and that's what counted. Russ Roberts: And, it's a great example of 'only.' Kevin Kelly: Yes, exactly. 
Russ Roberts: And, again, I don't think you should generalize and say, 'Do things you love no matter what the consequences: do them for you.' Because sometimes those are mistakes, and you go down. Kevin Kelly: Absolutely. And, I have a piece of advice in the book which says that: you've got to walk this line between never giving up and giving up when it's time to give up. And, then have your friends help you discern what the difference is. Because you can't really get things done unless you give things up. Right? If something is not working, you've got to change. And, that's a skill, as well. Russ Roberts: Yeah. We talked about that with Annie Duke and her book Quitting [Quit--Econlib Ed.], it's a nice book. |

| 42:04 | Russ Roberts: Let's switch gears. I want to talk about the book you wrote in 2016, The Inevitable, that we talked about on the program. And, that was a very optimistic book about technology and the future that technology would usher in. And, the world's gone in a very unexpected direction, I think, since then. First, just reflect on that. Do you think about that book? Do you have optimism left? I'm sure you do, but talk about where you think you went wrong or where you think you went right. Kevin Kelly: To answer the first question, I am more optimistic than I've ever been before. I think I have a natural mound[?] of sunny disposition. But, my optimism is not just my disposition; it's actually a carefully constructed perspective. It's a deliberate perspective. And, I am more optimistic now than before because I've been reading more history. And, a lot of my optimism comes from history--from what we have had in the past compared to what we have now and that momentum. So, in general, just to answer: Yes, I am more optimistic than ever. And, I think I stand behind most of the sentiments in The Inevitable. I stand by the larger controversial stance of--I became a very reluctant technological determinist. So, I allow myself to use the word 'inevitable' in the broad sense that a lot of the changes ahead of us, like AI [artificial intelligence], are inevitable. We're going to have it in some form or another, and no amount of regulations or anything else is really going to stop it. We have an incredible choice about the character of these AIs and who owns them and how they're regulated and stuff. But, what's inevitable is that they're going to be coming, that they're going to be there. So, I haven't retreated from that. You are probably referring to the world changing as the sentiment of people being upset, concerned about the way social media technology has infused everything and its role in our lives, which a lot of people found surprising and upsetting.
And, there's also the capitalistic overlay of the monopolies of the current companies running them. I liked Ted Chiang's analysis of this, which is--I think this is true--that when a lot of people are complaining about technology, they're really complaining about capitalism. That's what they're unhappy with. It's not the technology itself, but this capitalistic, consumeristic aspect of this. And, that may be true. So, in short, I think that this is a correction--to put it in an economist's term--I think we're in a correction right now of an infatuation with the idea that Silicon Valley is the answer to everything. And, then saying, 'Well, actually, there are a lot of problems here.' And, now there's this, kind of like everybody--not everybody--but many people are down on it. And, I think that's just, again, what I call the tech panic cycle, where people get frenzied about things that are mostly--what's interesting about the tech panic cycle is that most of the complaints are not about the people making them. They're third person complaints. So, the artists--no artist is saying, 'I lost my job to AI.' They're concerned about some other artist who may lose their job. It's this third person thing--they're representing the interest of the third person. It's not like: I am unhappy with the technology, or it's messing me up, but it's messing up someone else, or maybe, or could be. I think that's something that we have to overcome. Russ Roberts: Well, you mentioned Ted Chiang, you're talking about the--he's a science fiction writer and very thoughtful commenter on technology generally. I'm not sure what--I would say, I would phrase his criticism of capitalism a little differently. Capitalism is really good at giving us what we want, and sometimes we don't like what we want and we wish that someone would help us be better.
I was on a bus yesterday and I noticed everyone on their phone, almost everyone, and I wrote the following on Twitter, 'Riding on a bus and in many other situations, I'm inevitably led to the conclusion that one of the cruelest facts of human history is the reality of being left alone to ponder one's own thoughts.' So, in 1950, if you were on a bus, or if you were at a party in 1950 or 1980, you might have a book with you. And, there were people who would take books everywhere and read, but most of the time you looked off in the distance or you talked to the person next to you. And now we don't do that anymore. We get on our phone; and we find it, most of us, relentlessly entertaining. And, the idea of being deprived of that entertainment makes us sad. And, when we don't have it, we fidget and we're nervous, we're uneasy. And, that tweet was ironic. Some people missed it. Kevin Kelly: Yeah. So, I spent a lot of my time as an adult on buses--in Asia, primarily. I think I spent half of my adult life waiting for a bus to leave because in Asia they didn't leave until they were full. That was the schedule. And, I've seen tremendous numbers of people--this is all pre-phone, pre-Internet--on buses sitting there, as you say, staring out the window, not talking to anybody, staring out the window. There would be people on the streets sitting, squatting, doing nothing, absolutely nothing except whatever was in their head. I'm not sure that sitting and staring ahead is preferable to actually interacting with a phone, and maybe with someone else or an idea. I think it's better, and that's why I think people are doing it. I think they would prefer to interact with a phone rather than just stare ahead and daydream or whatever they were doing. Now, it is true that we need solitude to create things. We have to have some space to do it, and that could be crowded out.
But, I think the fact that people are on a bus looking at the phone is not necessarily evidence that is better than people staring out the window. And so, I'd like to see data. So, the one thing I say about the future going forward is that I want evidence-based decisions. I don't believe in the precautionary principle at all. I think it's a really misguided idea. I believe in the pro-actionary principle--that's my term for it--which is where we're constantly evaluating things based on evidence of how they're actually being used and what actually happens, rather than some imaginary harm to a third person. And so, I would say: Show me the evidence that this is actually worse. And, what I always say about a new technology, what we always have to ask about new technology, is: compared to what? Compared to what? So, phones compared to newspapers or books or staring out the windows. There's some new harm in social media: compared to what? Compared to Fox News? Compared to cable TV? Is there more information, is there more influence on the election from social media? Compared to what? Compared to cable TV or talk radio? So, let's see the evidence. |

| 51:38 | Russ Roberts: Well, Jonathan Haidt is working on a really interesting project, and he's, I think, as much as--maybe--my leaders in this area are Jonathan Haidt and Scott Alexander for worrying about whether they're wrong. And, their willingness to accept the possibility that the other side is right. So, Jonathan has created a remarkable document online--you can look at, we'll link to it--about the evidence that, starting around 2012, to my eye it looks more like 2010 sometimes--but somewhere around 2012, there's a rise in not-good things. Some of those things are hard to measure--anxiety, depression, sadness--but there are things that are fairly easy to measure--self-harm that results in a hospital visit, suicide. And, these are rising dramatically in the United States at least. And, he's got other country data in there. I haven't looked at them carefully yet, but certainly the United States-- Kevin Kelly: No, actually he doesn't. And, that's my criticism of it. And, we've had this conversation together, which is that it's primarily the United States and there are so many weird things about the United States that I think it's dangerous and misguided to decide on the policies of technological use based on how U.S. children are using it. First of all, it's really--again, compared to what? And so, is there bullying? Do they compare it to the bullying that happens in the hallways? No, they haven't. And, so-- Russ Roberts: Yeah, it's just a correlation, by the way. I want to be clear. It's not--I don't mean to suggest this is a proven case. It's not. Obviously there are many things happening in the United States between 2010 and 2023 that are not just the rise of cell phone access for young people. Kevin Kelly: So, I'm saying more evidence, more studies--this kind of looking at what technology does to us--should be the default of what we do, and that should be the basis in terms of how we make policy. And, yes, it's going to slow down things--which is good.
All right? I mean, that's fine. Social media is hardly 7,000 days old. It's still an infant. We don't really know what it's good for or we don't really even know what it's bad for yet. But, let's base it on the evidence rather than the imaginary harms of a third person. Russ Roberts: Well, I love that imaginary-harms-to-the-third-person thing. That's a deep truth and insight. |

| 54:26 | Russ Roberts: Now let's talk about ChatGPT [Chat Generative Pretrained Transformer] and AI generally. You wrote in 2017 an essay that I found quite compelling, still find compelling, and yet I'm wondering whether I'm overly optimistic. That essay made the argument that despite the fact that some really high-IQ [intelligence quotient] people are worried about AI evolving into something we don't control, that worry should not consume us. What was the argument and where do you stand now on that? Kevin Kelly: Yeah. In the past couple of years, there has been a hundred million dollars devoted to and spent on what we call AI alignment, AI safety. A hundred million dollars of people who are very concerned about the possibility of AI going out of our control. And, the extreme position of that, is that in short form, the idea is very--what's the word I want? It's very appealing in a certain sense, which is that we can make an AI that could design an AI smarter than itself, which would then design an AI smarter than itself. And, that process would also start to increase in speed and then in a very, very fast exponential time span we would have the sort of God-like thing that would not need us, that would displace us, that would not be aligned with our human values. And, that's sometimes called the Singularity. But, there's this idea of this sort of superhuman AI. And, in my investigations of that kind of logic and that argument, I could find, again, no evidence that that was happening. And so, I would say there's a greater than zero chance that that's possible. But--and there should be some amount of people who are dedicating their lives to make sure that it doesn't happen. I equate it to, like, asteroids: so, our entire civilization could be existentially extinguished by an asteroid impact. And so, there is a small group of people that should be funded and are being funded to make sure that we don't get hit by an asteroid.
So, there is a greater than zero chance that could happen. But we're not--that's not really influencing our general day-to-day policy. We're not altering what we do based on the fact that we could be wiped out by an asteroid. And so, the same thing with the AI thing. Yeah, that's a possibility that could happen. And there should be some people--but it's not a real serious thing that should influence what AI we decide to use and how it should be regulated right now. And, it's not really worth worrying about in that capacity for many reasons. But, let me just take one example, which is this idea that intelligence is a single dimension, like decibels, that's sort of increasing through AI. It's kind of quiet in mice and rats and gets louder with us. And, then you have geniuses where it's really high, and then there's the AI, which is extremely high. Well, there's no evidence whatsoever that intelligence is single-dimensional. It's multidimensional. It's very, very graduated. It's a huge possibility space. And, we have no idea really what those ingredients and dimensions even are. And, to say that this intelligence could expand exponentially, there's no evidence of that, either. Of--so far, the output of whatever you want to call intelligence--first of all, we don't have any metric for it. And, secondly, whatever metrics we have are not increasing exponentially. They're hardly even increasing linearly right now. What's increasing exponentially is the amount of resources or chips or CPUs [Central Processing Units] that you need to make that stuff. So, it's actually kind of the reverse, where it's taking more and more and more compute to actually move the needle just a little bit. And so, this idea that there's sort of a runaway exponential growth of intelligence--again, it's possible that it could happen, but there's no evidence of it. So, what I say is that this vision of the super-human intelligence is mythical if not religious.
It's a belief. It's not really based on evidence of what we know about either AI or the rest of the world. And so, it could happen. It's possible, but so unlikely that we really shouldn't spend much energy or have it as an influence in our deciding what we want to do as a society right now. |

| 1:00:02 | Russ Roberts: I'm going to give you my take on it. You can tell me if I understand it and if you agree or not. So, I've been in your camp for a long time. I interviewed Nick Bostrom on his book Superintelligence--which I found unconvincing. That was a doomsday book about the threat we're under. I mentioned to him that it was God-like: his view of artificial intelligence was very similar to that of medieval theologians. And, he was, not surprisingly, taken aback by that. But I think--so I'm in your camp, but I'm going to follow your first aphorism and I'll tell you why I'm more, why I'm a little more worried than I was a while back. There was an essay in the last couple of weeks by Erik Hoel, the neuroscientist, who I've interviewed, and for whom I have a huge amount of respect. He's very thoughtful. And, he wrote a really alarmist piece about ChatGPT. Basically it was not about ChatGPT but about Bing's version of it, where Bing, Microsoft's version, said, "I am Bing, I am Evil." That was the name of Erik's essay. He and I may talk about it on this program at some point. But, the theme of that was that we are the equivalent of a primitive pre-human species inviting homo sapiens into the campfire to see what we could learn from him. And, then of course, we probably ended up--homo sapiens--probably ended up killing off most of our pre-human competitors. And, here we are, bringing them into the campfire. Like: What are we thinking? It's a Trojan horse. We're bringing it in, knowing it's dangerous. And, besides the kind of things that Bing went off and said, I'd slightly disagree with your claim that it's not improving. This thing, ChatGPT in, like a month, it's passed these medical exams, and it's passed the bar, and it's passed the--da, da, da, da. And, obviously it's getting smarter and smarter already.
And, I think my take on it now--this is the background, I apologize for the long intro--but my take on it is that there's a confusion about what intelligence is. Not your insight, which is correct, that it's multidimensional. But, I think there's a leap of faith or presumption that a machine that mimics something that we do must therefore be sentient, as we are. And, I'm going to give you an example. I read an essay by Stephen Wolfram this week about how ChatGPT actually works, which is that it looks for the next word that is typically used in sentences that are on this topic. And, it's got a huge--billions of words and sentences--to choose from. So, it's not really thinking. It's just looking for the next word. And, then what Stephen points out is that, well, actually it works even better, and it becomes more lifelike, if you don't always choose the most likely next word from the data. In a long essay, a good chunk of which I read, he tries to explain the algorithm that generates these essays that seem rather well done--even though they're not great, they're pretty good. And, when you think about it that way, if that's actually what it's doing, I guess you could have two thoughts. One thought is, well, isn't that what we do when we write? I mean, I look for the next word and sometimes I reject the obvious one because I want it to be more interesting. So, ChatGPT is doing what I do. And it's like me. But I don't think it's sentient. And, I don't understand the argument of the Bostroms and all, who argue that it's going to have, quote, "a mind of its own." I don't believe that. I don't see the evidence for it. Am I missing something? Do you think I'm right? Kevin Kelly: No, you're right. I think Stephen said it in his piece, Stephen Wolfram's piece that you're referring to, that what it means is that we're changing our minds to say that actually writing a sentence or writing an essay is a more primitive and more elemental thing than we thought.
It's sort of like, it's not as big of an achievement as we imagined. Just like playing chess turned out to be a more mechanical thing than we thought. And, my point about the image generators was that we have always reserved creativity for the conscious: you had to be conscious first to be creative. And, it turns out, no, you don't need consciousness at all. You can make creativity, at least a lowercase version of it, in a machine and a system. So, creativity is not the high bar that we thought. It's actually a low bar. And, the next surprise that we're going to have is emotion. A lot of people think that, 'Oh, we won't have emotional machines or artificial emotion until we have consciousness and awareness.' No, no, no. Emotion is very elemental. Look at your pets. Look at any animals. They aren't very sentient, and they have emotions. And so, emotion, again, is a lower-down, more mechanical thing. And, writing turns out to be not as sophisticated an achievement as we thought it was. And, this auto-complete--which is what these things are, they're just going to complete the next thing--turns out to be, yeah, you can do that; and we'll look back and say, 'Oh, obviously.' I mean it's like translating things: that turns out to be not a big, higher-level chore either. It's just translation. And so, our mutual friend, Jaron Lanier, is concerned about this demoting of humanness. And, there is a sense in which we are demoting it, because we're saying lots of things that we have done and have identified as very human turn out to be maybe very human, but not necessarily very elevated. They turn out to be things that we can actually replicate. This is elementary stuff, and it's not anywhere near consciousness and it's not anywhere near self-awareness. And, we can't right now do deduction and logical reasoning and mathematical proofs. We might someday be able to do those things but it's important to remember that we haven't done those.
We've only been able to synthesize a few of the cognition types that are in this very complicated suite of what human intelligence is. And, the point that I make about these AIs--always plural, because there are going to be thousands of them all engineered in slightly different combinations to do different kinds of things. I've been using the AI image generators for a year now, every day making AI art. And, they have different personalities. There's Midjourney for this kind of thing, and there's DALL-E for that thing. And, they're engineered that way to be more arty or more photographic. And, we will work with them to do that. But, in every case, they're alien. Because they're running on a different substrate than what we run on, the way they do things is different. Their creativity is legitimate. It's really creative, but it's off. It's different than us. It's different, it's alien. They have a slightly different alien way of doing it. And that is its true benefit. That's the main reason why they're valuable, is because they're not doing it like humans. When they start to do proofs, they're not going to do them like a human does. When they have consciousness, it'll be slightly different. It'll be akimbo from us, because it's running on a whole different thing. It's different latencies in all different ways in which it's going to be different. And, that is its benefit. And so, we are going to work with them and prove things that we could not prove by ourselves. We'll make things that we can't make by ourselves. There will be lots of things that they do that we don't want to do. And, we realize, 'Well, we weren't the only ones who could do it': Be the only, don't be the best. And so, the thing is, is that this idea that they're kind of like superhuman, yes, they are going to be extra-human or whatever the other word is: exo-human. They are not going to be like us. And, they can't be exactly like us, no matter how complicated they become. And, that is what we're producing.
So, the way I think of it, I think the best metaphor for them is to think of them as artificial aliens. They're aliens from another planet, many different planets. They're maybe conscious, maybe sentient, some of them are as smart as animals and some of them are as smart as monkeys and some are as smart as grasshoppers. And, they all have their functions, but they're all artificial aliens. And, we will work with them like Spock and Kirk, as a team. |

| 1:09:45 | Russ Roberts: But, you don't think that there's a risk of them, quote, "having a mind of their own"? So, for example-- Kevin Kelly: No, they could have a mind of their own, for sure. If we didn't like what they're doing, we'd unplug them. Russ Roberts: Yeah, that's what I've always said. But, the other side doesn't seem to think that's--they think they can--I don't know. Why doesn't that work? Kevin Kelly: I'm so sorry. It's very easy to unplug things. Part of their argument is that it will happen so fast that we won't have a chance. And, that's this exponential thing that I don't see happening. We will have time and there are so many things that have to be precursors to this--I mean, 'this' being a takeover where they kill us--there are so many things that have to happen before that would happen that would be very evident that that's where it was going. This idea that it's going to happen in an instant or behind our backs so we're not going to know about it--I don't know, it's very strange. Russ Roberts: Nick Bostrom's argument, which I found very uncompelling, was that they'll know so much about us that they'll know how to fool us into not unplugging it. I think that's, again, the linear intelligence thing. It just assumes there's a number that'll[?] be a 58, we're only a 12, and therefore they'll be able to convince us of that. This doesn't work for me. But, there is an interesting related point that comes back to the Ted Chiang criticism, and it's part of Erik Hoel's point, which is: it is a little--some people are uneasy with the idea that the profit motive is what is pushing these technologies forward. Maybe there should be some alternative way this develops. Do you have thoughts on that? Do you think we should regulate it? Should we take it out of the corporate world and into some other world, etc.? Kevin Kelly: Yeah--so, who owns these things is a big thing. I'm of the opinion that network effects will be at work in AI.
That there will be certain AIs, a certain kind of AIs, that will benefit from more people using them. And, the more people use them, the better they'll get. They'll have that learning, which means you have network effects. And so, you will see these very large AIs explode through use; and they'll have kind of a natural monopoly--that it becomes a service. I've long talked about this, that a lot of the AI will be a service where you'll buy as much AI as you want to use, delivered like a utility. And so, in the sense that it might become a utility, I think there could be some regulation or maybe even some utility-like public ownership of it, and public control, and public accountability for those kinds of AIs. There'll be a lot of other AIs that are just going to be running standalone that you're going to purchase, and they'll just be custom-made or boutique, whatever it is. So, again there's plurals--AIs and[?] plurals, but there will be one version of it that could be an AI service and that might be so big and so ubiquitous that we might want to treat it as a commons. And, there also might be planetary-scale stuff happening. And, the one place where I do admit that there could be a super-organism-level planetary-like intelligence, is at the scale where all these things are connected together and there are emergent thoughts that happen at a scale that is very hard for us to even notice. And so, in that sense it will pay us to pay attention to how that develops at the planetary scale. And so, that certainly should have some kind of--what's the word I want?--management that's beyond the nation-state, beyond the national level, because it's a planetary thing. |

| 1:14:30 | Russ Roberts: Some people have criticized the current ChatGPT versions for relying on intellectual property that is not theirs. They don't own it. And, certainly in the art world, that's a related issue. DALL-E or Midjourney is riffing on art that is owned by people, that was created by people--I find it fascinating. I think it's very related to your theme of The Inevitable. I think it would be really hard to outlaw ChatGPT because of those kinds of concerns--efforts like ChatGPT. I don't think we can put the genie back in the bottle. I don't think human beings are going to say, 'I don't think we should work on this. It's too dangerous.' Human creativity, I don't think there's any--outside of a totalitarian state of--and even in a totalitarian state, I'm not sure it works. But, maybe in a modern totalitarian state we haven't seen yet. It's really hard to stop people from thinking up new things that they want to think about. Kevin Kelly: Yeah, I think it's a little bit of a distraction. And, I think there will be some people who will try to make ethical training sets where all the artists opt in, but I don't think it's really going to make a significant difference. And, the other thing that's happening in AI is almost all the AI that we have so far has been on neural nets, which require billions and billions of data points to train. We haven't been very good at trying to do AIs trained on small training sets--which is how humans work. So, a toddler will learn the difference between a cat and dog with just 12 examples. Well, we'll get to the point where we'll be able to do that. We'll have training sets that are very, very small. And, my prediction is at that point, every artist will be clamoring to be included in the training set to have influence, to be counted among the most important artists in the world because you will only have a few of them. So, I think that part of it is a distraction.
However, there is one thing that ChatGPT has uncovered that I think is actually very important, and very hard to figure out, and is going to open up, I think, a multi-decade-long project to figure out. And, that is this issue of whether you can trust the answers or believe the answers. And, that's a really, really fundamental problem that spills over to the general question of how we believe anybody and what we take as evidence of something being true and how we think we know things. So, I think there's a lot wrapped into that--that, this is sort of uncovering a little crack in the dike, and as we try and solve this for ChatGPT, we're going to actually be solving bigger problems that we had that we haven't really tried to address yet. And so, I'm very excited by this kind of revealing that the emperor has no clothes on, because it is a very difficult question. And, some of the things I've seen--I mean, I just used it tonight because we had a medical report in our family from a doctor, MRI [magnetic resonance imaging], very hard--I just fed it into ChatGPT. I said: 'Summarize this in plain English,' and it did a remarkable job. Now, there might have been things it said wrong. I don't know. So, the question is, can I fully trust this? Because I have seen examples of people giving it multiplication problems that it was giving incorrect answers to. At the same time, there are other things where it does an incredibly powerful job that is very believable and very trustworthy, and how are we going to tell the difference? And so, I'm very excited by this because I think that this little weakness in the armor is actually something very, very profound that will require us to make an infrastructure that has the ability to distinguish what's trustworthy and what's not, what's true and what's not. Anyway, so that's my rant. Russ Roberts: No, I think that's a fantastic point. And, Ted Chiang wrote a very thoughtful essay recently trying to explain why ChatGPT makes mistakes.
How could it attribute a book to me that I didn't write? Or a job--I'd be working somewhere I've never worked at? How could it make that kind of mistake? And, he wrote a very interesting piece--we'll put a link up to it. |

| 1:19:44 | Russ Roberts: But, I think, to come back to your point about expertise--or epistemology is what it really is: how do we know what we know? Sam Altman, who is the head of OpenAI, which created this, said--he's very apologetic that occasionally ChatGPT says things that are racist or biased or whatever. And, I'm thinking: Well, of course it's racist and biased. It's based on human beings that have been writing for the last, not since 20--I think after 2020 it stops. It's like, 'We didn't fix that yet.' So, it's not going to get that much better when it takes in modern data. And so, isn't the answer to this--instead of saying, 'Well, we just have to eliminate that'--isn't the answer that we have to be better consumers of truth and recognize that not everything we read on the Internet is true? That's a bad standard. The better standard is to be aware that you have to consume this thoughtfully. I'm going to come back to translation. Now I'm living in Israel, I'm living in Hebrew a lot. I'm trying to improve my conversational Hebrew. I'm using Google Translate all the time. Google Translate is wonderful for many things, but of course there are many things it can't do at all, and it's really dangerous. And, if you take a sophisticated--we deal with this all the time here at our college. We write something in English and we ask someone, 'Let's get it translated.' And, then after it's translated, people will say, 'Yeah, but is it in real Hebrew or just sort of translated Hebrew?' And, you start thinking about that. What does that mean? What do you mean? What is there besides translated Hebrew? The answer is that Hebrew is not English and it's not a one-to-one correspondence. And, there are sophisticated concepts and trains of logic that don't work in a foreign language, unless they're really thoughtfully--it requires someone who's really fluent in both. And, even for a person fluent in both, there are certain concepts that are not quite the same.
And, that's a reality. It's not like, 'Oh, we have to get a better translator.' That's not the answer. The answer is you might have to say things differently. And so, I think your point, which I love, is that the way I interpret it is: This forces us to think about what is true. And, it's not, 'Oh, we'll just have to get a better--there will be a website and you just say: Is this true, this MRI ChatGPT thing? Did they get it right?' The answer is--'Oh, well, who is going to design that?' And, the answer is, it's going to be imperfect. You are going to have to live with that. So, hard. Kevin Kelly: So, I think you're right, and this is why I think it's profound because I think it will force us to deal with how we can believe other humans in what they say. But, I think there is something maybe even more profound behind what I'm trying to say, which is that yes, so we've trained this AI on human experience; and human experience is fundamentally flawed. And so, we've given it the ethics of humans and we're saying--but here's the thing, is that we are going to demand that the AIs be better than humans. That's what we're demanding. We're just saying you can't be as racist, you can't be as sexist as humans are, and that's okay. We can actually do that because the ethics and morality are just code and we can put that code in. The challenge is we don't know what better-than-human looks like. Russ Roberts: Yeah, it's going to be-- Kevin Kelly: That's our challenge. It's like, 'Well, what does it look like to be non-racist, to be non-sexist, to be better ethically than humans?' And, that's our challenge, and that's what we're going to be working on because if we can do it, then we can code it in, but we don't really know that. And so, that's the exciting challenge of growing up, is to make our AI robots better than us because in the end, they're going to make us better humans. |

| 1:23:59 | Russ Roberts: Well, I'd love to end there, Kevin. I have a natural impulse to end there, but I can't because I'm reminded of this essay by David Chalmers on how philosophy hasn't answered any of the biggest questions, and how it's not a progressive--in the sense of making progress--discipline, and it's a failure. And, I'm thinking, 'No, it's not.' The fact that the questions of how to live, what is a life well lived, the fact that that is not well specified is not a failure. It is the nature of the being and the idea that we could be better humans, more ethical than we are, or have better ethics than humans, that's like asking the barber who only cuts the hair of people who don't cut their own hair to figure out what he's supposed to do. How are we going to get outside ourselves to figure out what would be better for us? I don't see that working. Kevin Kelly: Well, here's how we're going to do it. We're going to make aliens. We're going to make artificial aliens, and we're going to create these other beings, and they're going to help us go beyond ourselves. We can't do it by ourselves. This is the paradox, is that by ourselves we would be unable to make a better-than-humans, but we can with the help of these artificial aliens that we create. That's my premise, that's my prediction. I'm sticking with it. And, that's why I'm excited. Russ Roberts: My guest today has been Kevin Kelly, who will be back here in about six months after he writes the essay that convinces me of that. Kevin, thanks for being part of EconTalk. Kevin Kelly: Russ, you're just fabulous. I just enjoyed this conversation so much, as usual, and I appreciate your playing with me and inviting me on here. And, thank you for your enthusiasm for the book as well. Russ Roberts: Take care. |

Photographer, author, and visionary Kevin Kelly talks about his book Excellent Advice for Living with EconTalk's Russ Roberts. His advice includes how to have a deep conversation, why it's better to control time than money--and whether, in the end, we should give advice in the first place. Other topics of discussion include the right object of our aspirations, the reason for optimism when it comes to technology, and why Kelly is not worried about AI.

READER COMMENTS

Mike Krautstrunk

Mar 27 2023 at 11:15am

I hope the “additional ideas” section will include a link to a site Mr Kelly mentioned. He called it “now upstart” and indicated that it’s some sort of news aggregator, but I can find no such site. Thanks.

P.S. Mr Kelly’s comment comes at the 3:06 mark.

Marion

Mar 27 2023 at 8:46pm

Kelly clarified on Twitter, the website he was referring to was Upstract.

D

Mar 27 2023 at 1:36pm

Why can’t we simply unplug Facebook? People can’t even unplug their phones.

Mitchell Dowell

Apr 18 2023 at 6:26am

This does seem foolish. I’m not in the camp that AI will take over the world, but I think it is plausible. AI could have (and probably has) created its own language. How could we know to unplug it if we don’t know what it’s saying? Not to mention, this assumes the controller of the AI wants to unplug it.

netjunki

Mar 27 2023 at 1:52pm

I really enjoyed this episode and I’m looking forward to either reading the book or listening to it as an audiobook. I’ve definitely had an “only” approach in my career where possible; though I specialized in something that other people do, I bring some interesting additional skills to the job since my background is unusual. It’s also always nice to hear about folks who didn’t go to college and things worked out. When I was younger I was constantly unsure about my choice to leave college after 2 years and drop back into my job as a programmer at a university. But in the relatively long run (27 years later) things seem to have mostly worked out.

Kelly Malizia

Mar 27 2023 at 2:25pm

Pets aren’t sentient?

While the coming singularity is questionable, AI does pose a risk of significantly changing the meaning of words. For instance: if AI describes something as elegant (which it did the other day, just chose that word itself [maybe because it was the next most chosen word, as Roberts suggested]), it is offering an “opinion” that will be repeated. It is making judgements, in a way, even if only through common word selection.

Dan

Mar 31 2023 at 8:55am

That was an odd statement about pets. Pets show incredible sentience.

Dan A.

Mar 27 2023 at 6:00pm

Thank you Russ and Kevin for a deep conversation on this issue – the notion that AIs are functionally aliens that might elevate humans out of some of our own feedback loops is an intriguing insight.

Regarding whether AIs may succeed humans, I believe the salient point isn’t whether they will engage us in a form of warfare, or physically prevent us from unplugging them, but rather whether we _would_ unplug them. We have not unplugged Twitter, for example, despite its dubious contributions to societal well-being (let alone its current state of management disarray).

Far more likely than a “SkyNet scenario”, wherein we are engaged in conflict with autonomous cloud-powered robots, is a continued degradation of human agency and purpose. We are only beginning to contemplate what a world looks like where much of human input simply isn’t needed. What pathways through life are possible when our traditional course of schooling, standardized tests, higher education and productive employment are no longer relevant?

We should all have learned throughout the Covid experience that a large portion of the value of in-person schools is to provide a place for kids to go, a purpose to the day, and a way for working parents to balance their work and home lives. Are we prepared to contemplate the same role that our current employment model plays for adults as well, and how AI might disrupt it? Perhaps there is some future stable state where we’ve adapted to mass automation (both physical and intellectual), but the frictions of the transition period should not be overlooked.

Greg G

Mar 27 2023 at 10:00pm

I thought the discussion of AI achieving what we previously believed were significant human accomplishments, such as writing and art which we are now coming to understand aren’t actually “that” complicated and aren’t uniquely human skills, was interesting. It seemed to me the discussion then suggested AI achieving consciousness was unlikely. I was hoping the conversation was going to delve more into the notion of whether or not consciousness is ultimately, similar to writing or art, not that complicated. Perhaps it is simply a feedback loop within our minds which can be replicated in AI – though it isn’t evident to me what benefit that would create in an AI.

Scott

Mar 28 2023 at 10:01am

I wish Russ had pushed him a bit more on the social media point. I found it a bit strange that someone who wrote a book of aphorisms was suddenly demanding evidence that staring down at your phone all day was worse than the alternative. I think the really obvious answer (just turn it into a good aphorism) is, YES, yes it is worse. And the alternative to staring at a phone on a bus is not mindlessly staring out into space. Throughout the 80s and 90s people had magazines, novels, conversations with strangers, GameBoys, etc. And I think all of those things are better than an endless stream of news and memes and hot-take opinions.

Bob Anderson

Mar 28 2023 at 1:48pm

I was struck by the similarities between Mr. Kelly’s rejection of evidence of social media’s pernicious effect on girls and Walter Freeman’s insistence that lobotomies are sound medical treatments.

Dan Snigier

Mar 31 2023 at 9:02am

Yes, almost as though humans can’t voluntarily do things that harm them.

Shalom Freedman

Mar 28 2023 at 3:05pm

Kevin Kelly is certainly a man who has listened to the sound of his own drummer. His ‘distillations’ or pieces of life-wisdom clearly come out of his own unique way of acting and thinking in the world. As life-advice I have no doubt many of the thoughts can be of value and help, though in the examples given they do not seem to touch on the deepest of life’s questions or to approach the profundity of great aphoristic thinkers like his hero Thoreau, Emerson, Kafka, Kierkegaard, Nietzsche, Pirke Avot, and many others. Incidentally, it is the journalist James Geary who has done the most extensive work on the aphorism as a form, and this work is condensed in his ‘Guide to the World’s Great Aphorists’, though my personal choice for the best collection of the form is W.H. Auden’s and Louis Kronenberger’s ‘The Viking Book of Aphorisms.’

The second and more interesting part of the conversation was Kelly and Russ Roberts’s discussion of AI and its meaning for the future. Here both seem to me to be at the top of the game in knowledge of the subject and original insights. The ‘pull the plug out’ answer to AI’s taking over is simple, humorous and something I have vaguely wondered about, but not heard elsewhere. Their criticism of the idea of the ‘singularity’, the Ray Kurzweil, Nick Bostrom et al. idea that once a certain point is reached Intelligence will expand exponentially, made me realize how simple-minded I have been in not really questioning this idea. The idea of the multi-dimensionality of Intelligence put forth by Kelly and endorsed by Roberts also seems to me now obvious and connects well with the Howard Gardner idea of different kinds of human intelligence. However, when they come to talk about ChatGPT, it seems to me Kelly errs in talking about creativity, and especially creativity in writing, as a low-grade skill. There are, after all, so many varieties of writing. I don’t think the writing of ‘Hamlet’ and ‘War and Peace’ are simple, easily reproducible, low-grade activities.